Almost as quickly as ChatGPT made its way into the mainstream, so too did a profusion of tools dead set on dispelling any doubts about who or what produced a piece of writing. Simply copy some text, paste it into an AI detection tool, press a button and out comes its verdict, with varying degrees of detail depending on the tool.

Some AI detectors also serve specialized use cases, like those built specifically for education or SEO. But no matter the application, the goal is broadly the same: to determine whether a work was AI-generated or not.

For a long while (and maybe even still), these tools didn’t appear to work as advertised, at least not with a degree of accuracy you could reasonably rely on. Whether that has changed is what I hope to answer here:

- How do AI detection tools work?

- Are they any more accurate now than they were when they first came out?

- Which ones are worth their salt?

- And, can/should you trust them in a professional capacity?

Let’s find out.

What Are AI Detectors and How Do They Work?

At a high level, AI detectors all aim to answer the same question: Does this text look like something a machine would write? The difference lies in how confidently they claim to answer it, as well as how much nuance they’re willing to admit along the way.

What They Promise

Most AI detection tools market themselves as a way to quickly and reliably identify AI-generated content. Sometimes their verdict is framed as a binary (“human” vs. “AI”), though the underlying detection methods vary from tool to tool. Some claim to provide a level of transparency or sophistication beyond a simple yes-or-no answer, highlighting confidence scoring, probability ranges or sentence-level analysis.

Others promise use-case-specific accuracy, positioning themselves as particularly effective for classrooms (like Turnitin), editorial workflows or SEO quality control.

How AI Detection Works

Most advanced AI detectors use machine learning (ML) models and natural language processing (NLP) to pick up on common linguistic patterns, typically by measuring things like perplexity and burstiness. Here’s what those terms mean:

Perplexity measures how predictable a piece of text is to a language model:

- Low perplexity: The text is very predictable, i.e., uses common words, familiar phrasing, standard sentence structures. Low perplexity can be an indicator of AI-generated or heavily optimized SEO content.

- High perplexity: The text is less predictable, i.e., uses more original phrasing, unusual word choices or more complex sentence structure. This is more typical of human writing, especially creative work.
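
To make perplexity a little more concrete, here is a minimal sketch in Python. It assumes a toy bigram model with add-one smoothing trained on a two-sentence reference corpus; real detectors rely on far larger language models and don't publish their exact formulas, so the function names and the corpus here are purely illustrative.

```python
import math
from collections import Counter

def train_bigram(corpus_tokens):
    """Count single words and adjacent word pairs in a reference corpus."""
    unigrams = Counter(corpus_tokens)
    bigrams = Counter(zip(corpus_tokens, corpus_tokens[1:]))
    return unigrams, bigrams

def perplexity(tokens, unigrams, bigrams):
    """exp of the average negative log-probability of each word given the previous word."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    vocab_size = len(unigrams) + 1  # +1 reserves some probability for unseen words
    log_prob_sum = 0.0
    for prev, word in pairs:
        # Add-one (Laplace) smoothing keeps unseen pairs from getting zero probability.
        p = (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
        log_prob_sum += math.log(p)
    return math.exp(-log_prob_sum / len(pairs))

# Hypothetical reference corpus, already split into tokens.
corpus = "the cat sat on the mat . the dog sat on the rug .".split()
unigrams, bigrams = train_bigram(corpus)

predictable = "the cat sat on the rug .".split()
surprising = "rug the on dog mat cat .".split()

print("predictable:", perplexity(predictable, unigrams, bigrams))
print("surprising: ", perplexity(surprising, unigrams, bigrams))
```

The familiar word order scores a lower perplexity than the scrambled one, which is the same signal (at a much larger scale) that a detector reads as "predictable."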

Burstiness describes how much variation there is in sentence length, structure and complexity.

- Low burstiness: Sentences are similar in length and rhythm. This is common in AI writing, which tends to be smooth and consistent.

- High burstiness: Short, punchy sentences mixed with longer, more complex ones. This is more common in human writing and engaging storytelling.
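
There is no single agreed-upon formula for burstiness, so treat the following as one simple proxy rather than what any particular tool does: the standard deviation of sentence lengths divided by the mean length (the coefficient of variation). The sentence splitter is deliberately naive and the sample texts are made up for illustration.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length: 0.0 means perfectly uniform."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)

uniform = "The tool reads the text. The tool scores the text. The tool shows the score."
varied = ("It failed. After three rounds of edits and a long argument about commas, "
          "the draft finally went out. Nobody noticed.")

print("uniform:", burstiness(uniform))
print("varied: ", burstiness(varied))
```

Three five-word sentences in a row produce a score of zero, while the mix of two-word and sixteen-word sentences scores much higher.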

AI-generated text tends toward consistency and predictability, which technically reads as polished in its finished form, but still has a distinct, recognizable character that feels robotic. Human-written content, on the other hand, is often as unique and varied as the person writing it, especially in its more creative forms. That means plenty of character and voice, along with the higher burstiness and higher perplexity that are uncommon in the output of today’s popular generative AI models.

Measuring predictability means determining how likely one word is to follow another based on probability. Large language models are designed to favor statistically likely next words; detectors look for that same behavior in reverse. Any text (even human text) that consistently follows highly predictable patterns may prompt an alert.

Some tools go a step further by comparing a submitted piece of content against a large dataset of AI-generated and human-written text. From there, they assign probability scores based on that training data rather than absolute judgments.

Instead of declaring a piece of writing “AI-generated,” detectors typically flag passages that resemble linguistic patterns common to AI writing tools, then aggregate those signals into an overall assessment. The result is less a verdict than it is a weighted guess.
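
As a rough illustration of that aggregation step, the sketch below combines hypothetical per-sentence scores into one overall number using a length-weighted average. The weighting scheme and the sample scores are assumptions made for the example; actual tools don't disclose how they combine their signals.

```python
def overall_score(sentence_scores):
    """Length-weighted average of per-sentence scores.

    Each item is (word_count, score), where score is a 0-to-1 estimate
    of how much the sentence resembles AI-written text.
    """
    total_words = sum(words for words, _ in sentence_scores)
    if total_words == 0:
        return 0.0
    weighted = sum(words * score for words, score in sentence_scores)
    return weighted / total_words

# Hypothetical per-sentence results: (word count, AI-likeness score).
flagged = [(12, 0.9), (8, 0.2), (20, 0.7)]

print(round(overall_score(flagged), 2))  # 0.66
```

Note that the 0.66 here is a blend of three separate guesses, not a claim that 66% of the words came from a machine.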

Even as the technology continues to improve, it’s only when (or if) some type of watermarking or tagging technique becomes widespread that we’ll be able to know with certainty what came from AI and what didn’t.

Are They Accurate?

Most people who’ve used AI detectors — or been accused of submitting AI-generated copy — would tell you no, they’re not very accurate. But what does science say?

Well, research actually says yes and no. One independent study compared 16 publicly available AI detectors, including well-known tools such as Copyleaks, Turnitin and Originality.ai. Researchers fed each tool a set of GPT-3.5, GPT-4 and human-created documents, and those three tools identified them with “very high accuracy.” The 13 other tools could distinguish between GPT-3.5 papers and human-generated papers reasonably well, but were ineffective at distinguishing between GPT-4 text and human writing.

Of course, we’re now onto GPT-5.2, so I imagine it’s only getting more difficult for AI detectors to perform well. That said, the research asserts that “Technological improvements in publicly available AI text generators are matched very quickly by improvements in the capabilities of the best AI text detectors.”

According to this research, and from voices I’ve heard around the internet, Turnitin is on top when it comes to accuracy; however, it has a very specific use case and is not available for general consumer use, leaving most folks with other options that show varying effectiveness and may be prone to providing false positives or false negatives.
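
If you want to gauge a detector yourself, false positives and false negatives are straightforward to tally once you have a set of texts whose origin you already know. Here is a minimal sketch; the labels below are invented for illustration and are not taken from the study.

```python
def error_rates(truth, predicted):
    """Compare known labels with a detector's verdicts ('ai' or 'human')."""
    pairs = list(zip(truth, predicted))
    # False positive: human-written text flagged as AI.
    fp = sum(1 for t, p in pairs if t == "human" and p == "ai")
    # False negative: AI-written text passed as human.
    fn = sum(1 for t, p in pairs if t == "ai" and p == "human")
    correct = sum(1 for t, p in pairs if t == p)
    humans = truth.count("human")
    ais = truth.count("ai")
    return {
        "accuracy": correct / len(truth),
        "false_positive_rate": fp / humans if humans else 0.0,
        "false_negative_rate": fn / ais if ais else 0.0,
    }

# Hypothetical test set: four human-written and four AI-written samples.
truth = ["human", "human", "human", "human", "ai", "ai", "ai", "ai"]
predicted = ["human", "ai", "human", "human", "ai", "human", "ai", "ai"]

rates = error_rates(truth, predicted)
print(rates)
```

For anyone reviewing writers’ work, the false positive rate is the one to watch most closely, since it counts human writing wrongly flagged as AI.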

What About Built-In Detectors in Your Favorite SEO Tools?

Brafton uses Ahrefs for some SEO and rank tracking, and I recently noticed that it also offers a free, browser-based AI detection tool. So I decided to run a small test to see whether it (and others like it) is a decent option.

Obviously, this is a one-off test and not nearly as thorough or data-backed as the example above, but interesting and good to know nonetheless.

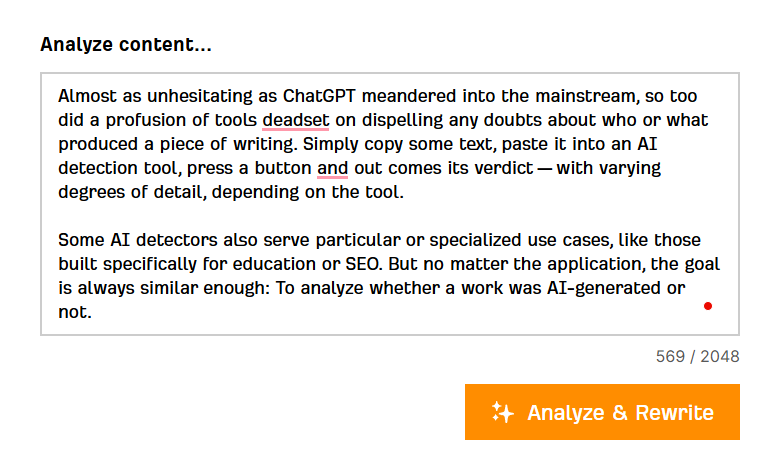

I pasted the intro from this blog into the tool, which I wrote myself the old-fashioned way:

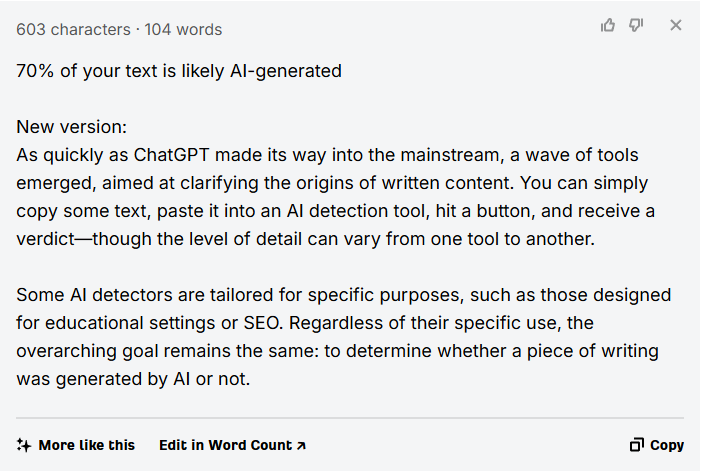

The result: “70% of your text is likely AI-generated.” Interestingly enough, this free tool offers a humanizer of sorts that’s meant to “detect AI-generated content and rewrite it to sound human […]. Paste your text and get accurate, human-like results in seconds!”

To me, the result it provided sounds more like what I’ve come to expect of generative AI writers than my original input:

For someone who’s read up on and seen firsthand the way AI tools work, the phrase “a wave of tools emerged” sticks out as something AI would totally generate.

For fun, I copied this result and ran it through the detector again. And to its credit, it said that 80% of the text is likely AI-generated, so it did skew in the right direction, but its initial guess at my original copy was still quite a bit off.

Based on this single informal test instance, I don’t think it achieved its advertised purpose of both identifying AI content and reworking it to sound more like human writing.

But remember, this is just an example of one free tool. There are tons more out there, some vastly more effective than others, according to the research. Still, this is a great example and reminder to approach all AI detectors with skepticism and scrutiny.

That same advice applies to any AI detection feature that might be baked into your everyday SEO or content tools. In its Pages section, Ahrefs offers a column that’s meant to tell you whether any given page on a website has none, low, moderate, high or very high levels of AI-generated content. I took a look at that, too: Of all our pages flagged as very high, high or with no AI content, it was only correct 55.55% of the time. So, more right than wrong, but “right about half the time” isn’t a benchmark you can rely on, and it’s worth setting your expectations accordingly before you use these tools.

How To Use AI Detectors Without Being Too Prescriptive or Worried About Results

All this to say that if AI detectors feel like a good fit for your workflow, you should use them, albeit after some digging on which product might perform the best for your use case. And even then, it’s best to approach AI checkers with care and critical thought, not as definitive answers to your AI content questions.

If you’re reaching for AI detectors because you’re worried about impairing your rankings, here’s a good reminder: Google itself has said that its algorithms prioritize the quality and value of content over how it was produced. That means AI content can still rank — and rank well — so long as your editorial standards remain intact and your audience’s best interest is top of mind.

Here are some tips on how to approach AI detectors conscientiously to avoid offending your hard-working content writers:

Understand Which Writing Styles Detectors Are More Likely to Flag

There are tons of writing styles, far too many to count. And it’s true that some — even if fully human-written — resemble AI output. Humans are completely capable of emulating AI’s distinct style, even unintentionally. Those algorithms had to learn the language from somewhere (hint: they learned it from us).

Highly structured or informative writing is especially prone to being flagged. Think listicles, how-to guides, summaries, definitions and explanatory content that prioritizes clarity over personality. These formats often rely on predictable sentence construction, transitional phrases and evenly paced paragraphs.

The same is true for writing that’s been heavily edited or standardized. Content that’s passed through multiple rounds of revision, style guides or SEO optimization can lose some of its idiosyncrasies.

If you’re asking for or producing content in a style that detectors might be likely to flag, it’s worth recognizing that risk upfront. A high score in these cases doesn’t necessarily indicate AI use; it may simply reflect the constraints and goals of the format itself.

Don’t Over-Interpret Percentage Scores

Even the scores from the best tools shouldn’t be overtrusted. The numbers (probably) don’t represent a literal ratio of human-written versus AI-written sentences. Instead, they reflect a probability estimate based on linguistic patterns, predictability and similarity to known examples in a detector’s training data. A score of 60% or 80% doesn’t confirm authorship, but rather signals that the text shares a certain number of traits commonly found in AI-generated content.

It’s also worth knowing just how sensitive some detectors and scores can be. Small edits, rephrasing a sentence or changing formatting can swing results in a big way. Taken at face value, percentage scores can create a false sense of precision. They’re best treated as rough indicators.

Consider the Writing Context Before the Score

Some content formats call for very clear, very formulaic writing that lacks stylistic variation, like instruction manuals, policy documentation, technical explainers or complex white papers. Most AI checkers, including the “good” ones, might flag these types of content — even if human writers were behind it all.

Context also includes how the content was produced. Was AI used only during early brainstorming? To generate an outline? To help rephrase a sentence that was later heavily edited? Many modern workflows involve some degree of AI assistance, but detectors generally can’t distinguish between fully automated output and text that’s been shaped, revised and approved by a human.

So, always be sure to understand the context of the work before you ask a machine learning algorithm what it thinks. Step back and consider the purpose, format and process behind the work.

Final Thoughts

Research shows that there are some fairly competent AI detectors out there. But it also reveals just how wide a spectrum these tools span when it comes to efficacy and reliability. Some work great; others don’t seem worth the energy to experiment with.

Approach your AI detectors just like you do other AI tools (after diligent examination and thought, with realistic expectations and responsible, reasonable use), and there’s definitely some value to uncover here.